Preface

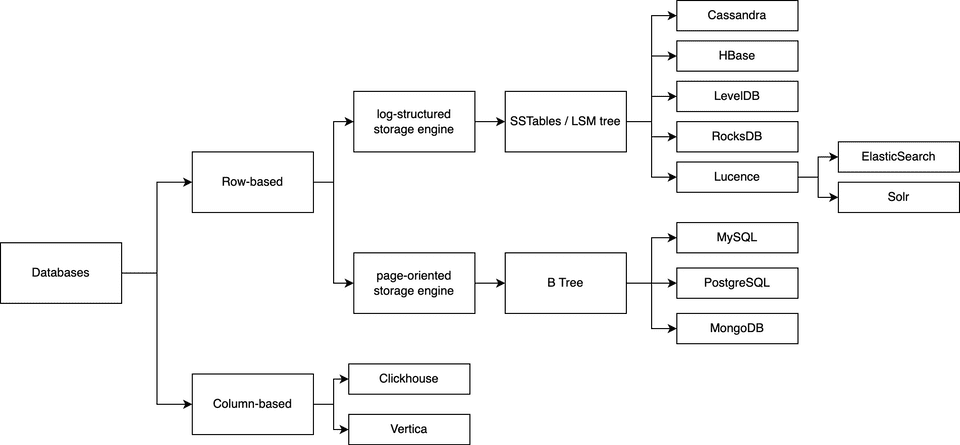

This is the first article of a series to summarize the key concepts of Chapter 3. Storage and Retrieval in the Designing Data Intensive Application book. The series consists of 3 articles, including log-structure storage engine (SSTables and LSM Tree), page-oriented storage engine (B Tree) and column based storage engine.

Why study database storage and retrieval ?

There are so many different types of database available, like MySQL, MongoDB, Cassandra, Clickhouse and etc. While designing our application, we should be able to select proper tool. Besides, Understanding underline core concepts is crucial for us to tune database performance.

What we are going to learn in this article ?

- The underlining core concepts of different data storage structures and retrieval among modern databases.

- The pros and cons of each type of it and when we should use it.

Landscape of database storages

In short, database storages are divided into two categories, row-based and column based. We will talk more about the difference of them in future article. In this article, we will talk more about the log-structure storage under row-based storage. And in next article, we will talk more about page-oriented storage engine.

Log-structured storage engine

High level concept

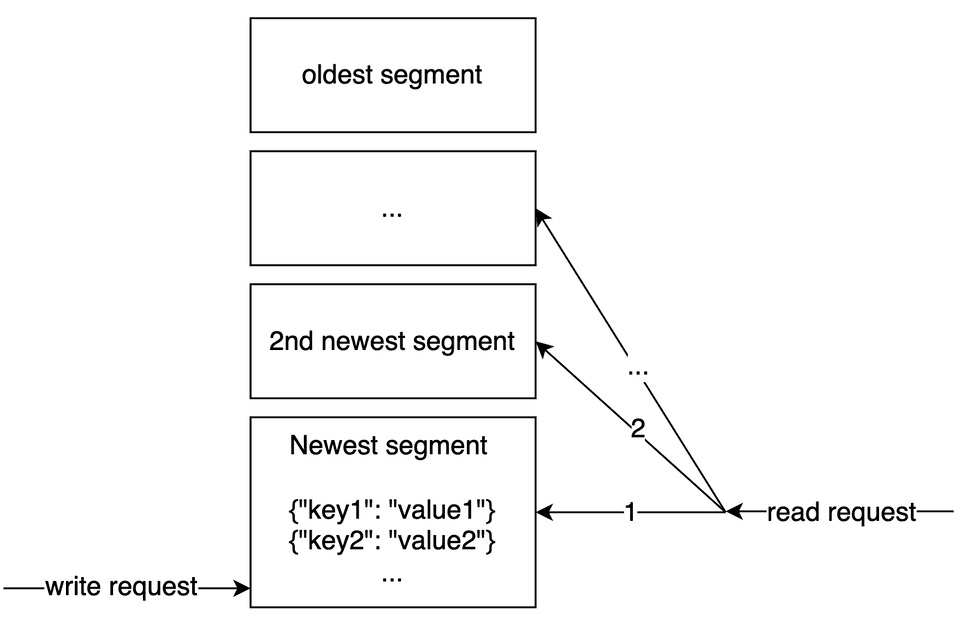

- Every time when there is an new write query, we append the data to the end of file.

- When file size reach certain threshold (e.g. several MB), we append data to another new file. We call each file as “segment”.

- Compact & Merge the files at some points in background process.

Very first version of our own DB

The concept sounds very simple, let’s write a simple version of DB by using shell script.

#!/bin/bash

db_set () {

echo "$1,$2" >> database

}

db_get () {

grep "^$1," database | sed -e "s/^$1,//" | tail -n 1

}$ db_set 123456 '{"name":"London","attractions":["Big Ben","London Eye"]}'

$ db_set 42 '{"name":"San Francisco","attractions":["Golden Gate Bridge"]}'

$ db_get 42

{"name":"San Francisco","attractions":["Golden Gate Bridge"]}It works! Wow, we already write our first very simple database. So, let’s think about pros and cons of this kind of databases.

- Pros: very good write performance.

- Cons: while searching data, we need to search from newest segment, one file after another. The performance could be worse if we search old data.

- Then, how can we improve the read performance ?

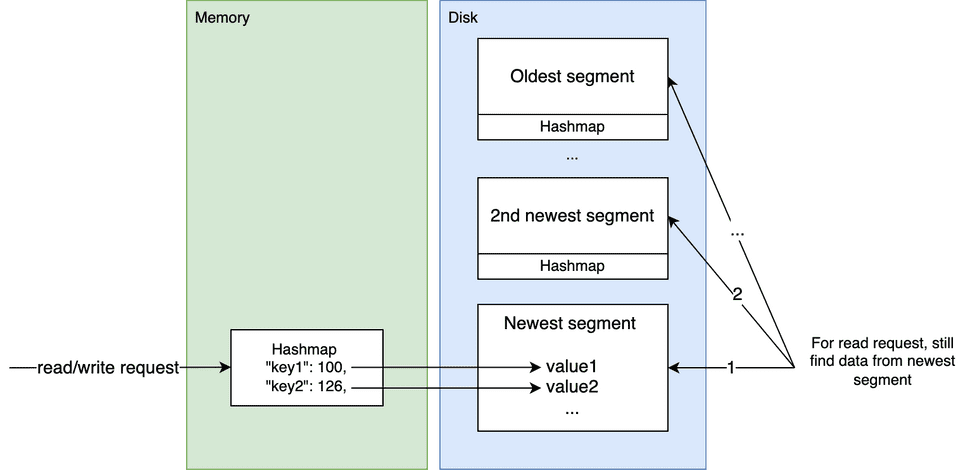

Enhancement: adding index

Now one bottleneck is, we need to search the key one by one in a segment file. The time complexity is O(n). If we ever learned any programming language, we can intuitively think of the hashmap data structure (e.g. dict in python, object in javascript).

If we add a hashmap for each segment, maintain the position of value for each key. Then we can reduce the searching performance around O(log n). This is actually what Bitcask did.

How it works:

-

For write request:

- Update key value in hashmap, append data to log file.

- When file is large enough, write both hashmap & data as file.

-

For read request:

- Load hashmap from newest segment, search whether the key is in hashmap.

- If not found, load next segment, and do the same thing.

Discussions

Okay, let’s stop here for a while. What is the pros and cons of this kind of database ? Can we make it even better ?

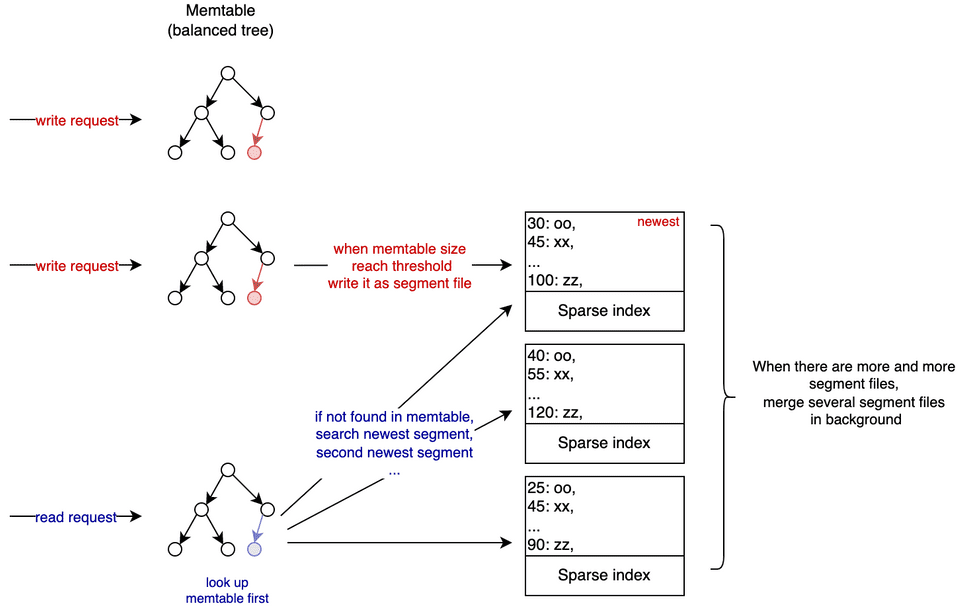

SSTables & LSM Tree

Yes, here comes SSTables and LSM Tree.

Let’s do almost exactly the same logic we mentioned above (log + index), but with a change: the data in each segment file are sorted by keys

The benefits of sorted data in segment file

- Merge several segment files can be very efficient by merge sort algorithm, even for very large file that cannot be loaded into memory.

- Since segment data was sorted, we don’t need to store all key value pairs, we can keep sparse index and do binary search.

How it works

-

For write request:

- Update key value in a balance tree, append data to log file (only for recovery)

- When the tree is large enough, write the data in key order as a segment file

- only need to kept very sparse index for each segment since segment file is sorted

-

For read request:

- try to search it from memtable first

- if not found, search the newest SSTable

- do binary search via sparse index

- extract entire small block from disk into memory, if not found, search next next SSTable

-

Background process:

- merge several SSTables(segment file) by merge sort

-

For index:

- We can have smaller index data stored in disk.

- For example if there are 10,000 key-value pairs in one segment file, we can only sparsely store 100 key-pairs in our index only. Then after doing binary search, we can locate the possible 100 key-value pairs in segment file.

- Because OS load file into memory by fixed size block, there isn’t much difference of I/O performance comparing to only load 1 key-value pair (disk I/O is usually the bottleneck of database performance). And scan 100 key-value in memory can still be very efficient.

- So, to sum up, more sparse index, we can save more disk space, but the searching performance can be worse.

Terms

- SSTables (Sorted String Tables) → the data stored in segment was sorted

- LSM Trees (Log Structured Merge tree) → balanced tree in memory, sorted log file in disk

- Real world databases use the concept of LSM tree & SSTables: Cassandra / LevelDB / HBase

- Full-text search engine: Lucence / Solr / ElasticSearch

Discussions

- What is the potential bottleneck for this kind of database ?

- How can we improve for this design ?

Summary

In this article, we walk through the design of log structure storage engine, and the basic concepts of SSTables & LSM tree. We can know that data was appended to segment file, so in general we can have good write performance. But the read performance, in worst case we have to read every segment file. In order to enhancement read performance, we can add index on segment file, including hash map or balanced tree with sparse index.

We will learn more about page-oriented storage engine, then we can compare the pros and cons of these two categories of databases.